Today we will explore how to enhance the performance of a poorly performing .NET REST API using dotTrace. Specifically, we will delve into an IO-bound problem and leverage dotTrace's profiling modes, sampling and timeline, to aid in identifying and resolving the bottlenecks.

dotTrace is a performance profiling tool developed by JetBrains for .NET applications. It helps developers diagnose performance issues in their software by providing detailed insights into how well the application is running. dotTrace can profile different types of .NET applications, including desktop, web, and server applications, and it supports various .NET frameworks.

To get started, you can either download and install dotTrace as a standalone or install the plugin inside Rider IDE or Visual Studio. You can also install both the standalone and plugin edition. We will use both in this example.

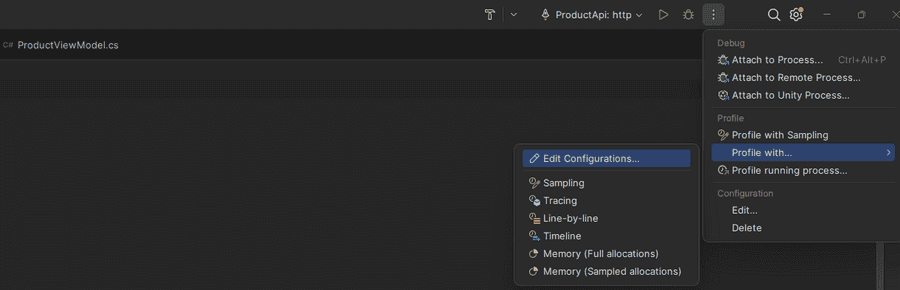

When the plugin is installed, we can see the following launch options inside the IDE.

Here we have the possibility to choose which profiling mode we should use:

Sampling Profiling: Takes periodic snapshots of the application's call stack to identify performance bottlenecks with minimal overhead. Best for initial, high-level performance assessment.

Timeline Profiling: Captures real-time events (CPU, memory, threads) over the application's execution timeline. Ideal for analyzing complex issues related to threading or memory spikes.

Tracing Profiling: Records detailed information on every function call, including execution times. Suited for in-depth analysis of specific functionalities, but with significant overhead.

Line-by-Line Profiling: Offers granular insight by tracking execution time and memory usage for individual code lines. Used for detailed optimization of specific code sections, highest overhead.

Note: Sampling Profiling and Timeline Profiling can attach to an already running process while Tracing and Line-by-Line cannot.

Here is some code from the application that we are going to profile and try to make better based on results from dotTrace:

using Dapper;

using Npgsql;

namespace ProductApi;

public interface IProductRepository

{

Task<List<Product>> GetAllProductsAsync();

Task<Dictionary<int, string>> GetAllCategoriesAsync();

Task<Dictionary<int, string>> GetAllSellersAsync();

Task<Dictionary<int, double>> GetAllDiscountsAsync();

}

public class ProductRepository : IProductRepository

{

private readonly string _connectionString;

public ProductRepository(string connectionString)

{

_connectionString = connectionString;

}

public async Task<List<Product>> GetAllProductsAsync()

{

await using var con = new NpgsqlConnection(_connectionString);

await con.OpenAsync();

const string sql = "SELECT id, name, price, category_id as CategoryId, seller_id as SellerId, discount_id as DiscountId FROM products";

var products = (await con.QueryAsync<Product>(sql)).ToList();

return products;

}

public async Task<Dictionary<int,string>> GetAllCategoriesAsync()

{

await using var connection = new NpgsqlConnection(_connectionString);

await connection.OpenAsync();

var categories = await connection.QueryAsync<Category>("SELECT id, name FROM categories");

return categories.ToDictionary(x => x.Id, y => y.Name);

}

public async Task<Dictionary<int, string>> GetAllSellersAsync()

{

await using var connection = new NpgsqlConnection(_connectionString);

await connection.OpenAsync();

var sellers = await connection.QueryAsync<Seller>("SELECT seller_id as SellerId, name FROM sellers");

return sellers.ToDictionary(x => x.SellerId, y => y.Name);

}

public async Task<Dictionary<int, double>> GetAllDiscountsAsync()

{

await using var connection = new NpgsqlConnection(_connectionString);

await connection.OpenAsync();

var sellers = await connection.QueryAsync<Discount>("SELECT discount_id as DiscountId, discount_amount as DiscountAmount FROM discount");

return sellers.ToDictionary(x => x.DiscountId, y => y.DiscountAmount);

}

}

using Microsoft.AspNetCore.Mvc;

namespace ProductApi;

[ApiController]

[Route("[controller]")]

public class ProductsController : ControllerBase

{

private readonly IProductRepository _productRepository;

public ProductsController(IProductRepository productRepository)

{

_productRepository = productRepository;

}

[HttpGet]

public async Task<IActionResult> Get()

{

var products = await _productRepository.GetAllProductsAsync();

var categories = await _productRepository.GetAllCategoriesAsync();

var sellers = await _productRepository.GetAllSellersAsync();

var discounts = await _productRepository.GetAllDiscountsAsync();

var response = products.Select(x => new ProductViewModel

{

Id = x.Id,

Category = categories[x.CategoryId],

SellerName = sellers[x.SellerId],

DiscountAmount = discounts[x.DiscountId],

Price = x.Price,

Name = x.Name

});

return Ok(response);

}

}

Before we begin with dotTrace, let's take a moment to plan our approach a bit. Keep in mind that diving deep into granular-level profiling will undoubtedly give us a wealth of insights but at the expense of greater demand on our application. It might also give us a mountain of details to wade through. It's also important to pinpoint the type of bottleneck we suspect is at play. Are we dealing with delays caused by IO operations, or is the CPU being pushed to its limits? Or maybe it's a mix of both?

Given that IO operations (in general) take more time compared to CPU operations, it can be good to look into that first.

Let's get a good overview by running the least overhead profile method Sampling Profiling. We can do this by first starting the application and then attaching to the process or launching the application in profile mode.

running:

curl -o /dev/null -s -w 'Total time: %{time_total}s\n' http://localhost:5162/products

output:

Total time: 4.483009s

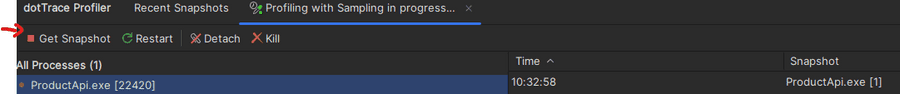

We then will collect the profiling data by clicking:

Then, navigate to the other tab to view all snapshots. By right-clicking on a snapshot entry, we can inspect the snapshot:

Here we have some options on how to open it:

- Open Snapshot - Inside the IDE

- Open in standalone dotTrace - Open it in the standalone edition (if it's installed)

- Opening the saved snapshot file - We can also browse the explorer. Every snapshot is automatically stored to the disk, under the folder %USERPROFILE%\dotTraceSnapshots[processName] [date]\

Let's choose Open in standalone dotTrace:

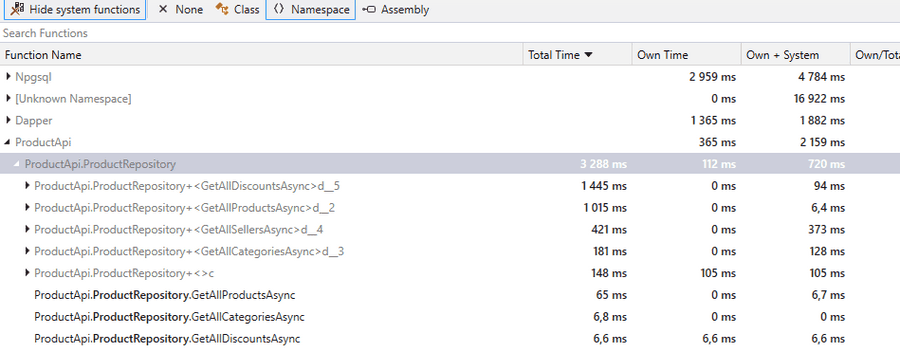

And then, let's open up the plain list option to go into a bit more detail:

In the plain list view, we can see that the most time is spent inside the packages Npgsql, Dapper, and our ProductRepository.

Before we attempt to improve it, let's use the timeline profiler to see how these database calls are done.

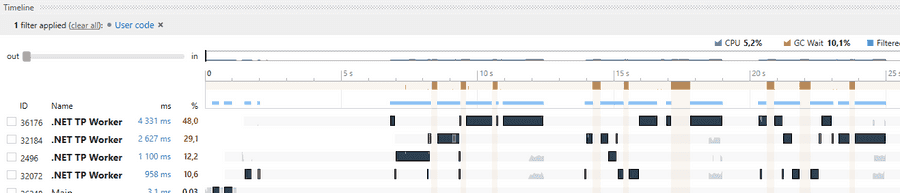

Running timeline profiling:

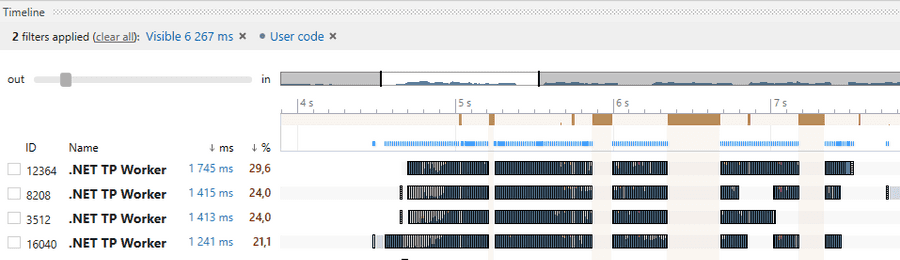

By filtering on the User Code subsystem, we can identify that there are four Thread Pool Workers that use the most time. We can also see that they run after each other in series. Inspecting the Plain List, we can identify that it's the calls:

var products = await _productRepository.GetAllProductsAsync();

var categories = await _productRepository.GetAllCategoriesAsync();

var sellers = await _productRepository.GetAllSellersAsync();

var discounts = await _productRepository.GetAllDiscountsAsync();

Because they are not dependent calls, let's run them in parallel instead.

var productsTask = _productRepository.GetAllProductsAsync();

var categoriesTask = _productRepository.GetAllCategoriesAsync();

var sellersTask = _productRepository.GetAllSellersAsync();

var discountsTask = _productRepository.GetAllDiscountsAsync();

await Task.WhenAll(productsTask, categoriesTask, sellersTask, discountsTask);

var products = await productsTask;

var categories = await categoriesTask;

var sellers = await sellersTask;

var discounts = await discountsTask;

Now let's profile it with the timeline again:

running:

curl -o /dev/null -s -w 'Total time: %{time_total}s\n' http://localhost:5162/products

output:

Total time: 2.941794s

It looks like it's a bit faster.

Inspecting timeline:

As we can see in the image above, the thread pool workers start all at the same time, increasing their efficiency.

But, 2.9 seconds is still too slow for a good user experience. Let's have a look at the overall design of the application.

We get the products and enrich them with data from categories, sellers, and discounts tables. Doing this with one call by joining the tables should be much more efficient.

Let's make the following changes:

public async Task<List<Product>> GetAllProductsAsync()

{

await using var con = new NpgsqlConnection(_connectionString);

await con.OpenAsync();

const string sql = @"

SELECT

p.id,

p.name,

p.price,

c.name as CategoryName,

s.name as SellerName,

d.discount_amount as DiscountAmount

FROM products p

INNER JOIN categories as c ON c.id = p.category_id

INNER JOIN sellers as s ON s.id = p.seller_id

INNER JOIN discounts as d ON d.id = p.discount_id

";

var products = (await con.QueryAsync<Product>(sql)).ToList();

return products;

}

[HttpGet]

public async Task<IActionResult> Get()

{

var products = await _productRepository.GetAllProductsAsync();

return Ok(products);

}

running:

curl -o /dev/null -s -w 'Total time: %{time_total}s\n' http://localhost:5162/products

output:

Total time: 1.658726s

Lets view it with timeline profiling again:

Ok, we can see that we are mostly using 1 thread pool worker, but it's more efficient because of the join. We can also note the CPU level is less than previously.

But 1.6 seconds is still quite a lot.

Inspecting the tables, we can see that about 3 million rows are fetched. This is excessive, let's do something about it. No user wants to inspect 3 million rows. We should add pagination to make it more manageable for the user and the server.

Lets do the following changes:

public async Task<List<ProductViewModel>> GetAllProductsAsync(int page, int pageSize)

{

await using var con = new NpgsqlConnection(_connectionString);

await con.OpenAsync();

// Calculate the number of rows to skip

var skip = (page - 1) * pageSize;

var sql = $@"

SELECT

p.id,

p.name,

price,

c.name as CategoryName,

s.name as SellerName,

d.discount_amount as DiscountAmount

FROM products p

INNER JOIN categories as c ON c.id = p.category_id

INNER JOIN sellers as s ON s.id = p.seller_id

INNER JOIN discounts as d ON d.id = p.discount_id

ORDER BY p.id

LIMIT {pageSize} OFFSET {skip}

";

var products = (await con.QueryAsync<ProductViewModel>(sql)).ToList();

return products;

}

[HttpGet]

public async Task<IActionResult> Get(int page = 1, int pageSize = 10)

{

var products = await _productRepository.GetAllProductsAsync(page, pageSize);

return Ok(products);

}

running:

curl -o /dev/null -s -w 'Total time: %{time_total}s\n' "http://localhost:5162/products?page=1&pageSize=10"

output:

Total time: 0.002866s

Perfect!

That's pretty much it for this post.

Happy coding!